Improving the intelligence of the robot with human activity tracking

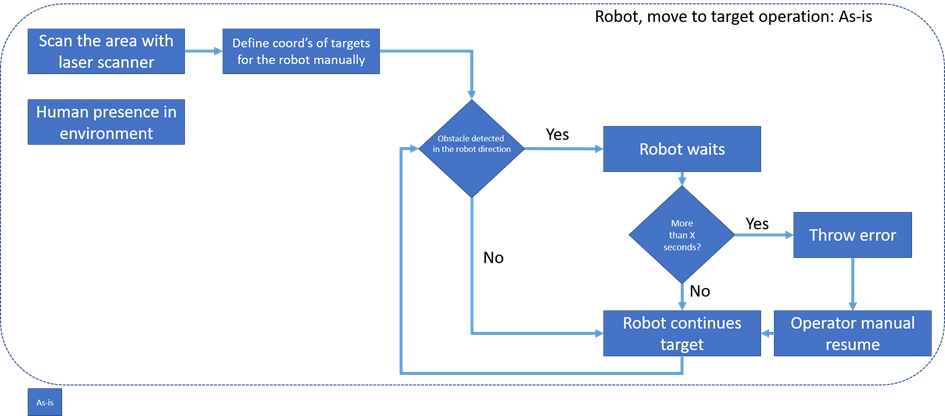

At the SmartFactory-KL laboratory, the autonomous mobile robot (AMR) assists workers by transporting the product between the production islands. Currently, if there is an obstacle in its path, the robot stops and waits for a set amount of time before moving on. However, if the obstacle is still there, it must be removed and the AMR must be restarted manually. The as-is scenario can be seen below:

With the STAR project, we would like the AMR to react to objects and humans differently. We use several AI techniques to make this possible. The first step is to record everyday human behaviour to get an idea of the different activities. We repeat the recordings with more people performing the same activities (in the same and in different orders), collecting more data to increase accuracy. Based on the collected data, we will feed the robot the likely next activities of the humans online. The robot will then react to a human differently than to an inanimate object. For example, if a human is likely to cross the robot's route in the next two seconds, the robot may take another route. Later, this use case will also be supported by, and merged with, cameras that feed live images from the environment.
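
To make the idea of "possible next activities" more concrete, here is a minimal sketch in Python. It is not the STAR project's actual method: it swaps in a simple first-order Markov model that counts, in the recorded sequences, how often one activity follows another. The activity labels, the `0.5` threshold, and all function names are hypothetical and chosen only for illustration. The sketch also ignores timing, so the two-second horizon mentioned above is not modelled.

```python
from collections import Counter, defaultdict


def fit_transitions(sequences):
    """Estimate P(next activity | current activity) from recorded sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        # Count every pair of consecutive activities in the recording.
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    # Normalise the counts into probabilities per current activity.
    return {
        current: {nxt: n / sum(c.values()) for nxt, n in c.items()}
        for current, c in counts.items()
    }


def crossing_probability(transitions, current, crossing_activities):
    """Probability that the next activity is one that crosses the robot's route."""
    next_probs = transitions.get(current, {})  # unseen activity: no estimate
    return sum(p for act, p in next_probs.items() if act in crossing_activities)


def choose_action(transitions, current, crossing_activities, threshold=0.5):
    """Return 'reroute' if a route crossing is likely enough, else 'continue'."""
    p = crossing_probability(transitions, current, crossing_activities)
    return "reroute" if p >= threshold else "continue"


# Hypothetical recordings: one list of activity labels per recorded session.
recordings = [
    ["assemble", "inspect", "walk_to_island_b"],
    ["assemble", "inspect", "walk_to_island_b"],
    ["inspect", "assemble", "inspect", "pack"],
]

# Assumption: only walking to island B crosses the robot's planned route.
crossing = {"walk_to_island_b"}

transitions = fit_transitions(recordings)
print(choose_action(transitions, "inspect", crossing))
print(choose_action(transitions, "assemble", crossing))
```

Adding recordings from more people, as described above, would simply extend `recordings` and refine the estimated probabilities. A real system would likely need a richer model and position data to decide whether and when a person actually crosses the route.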